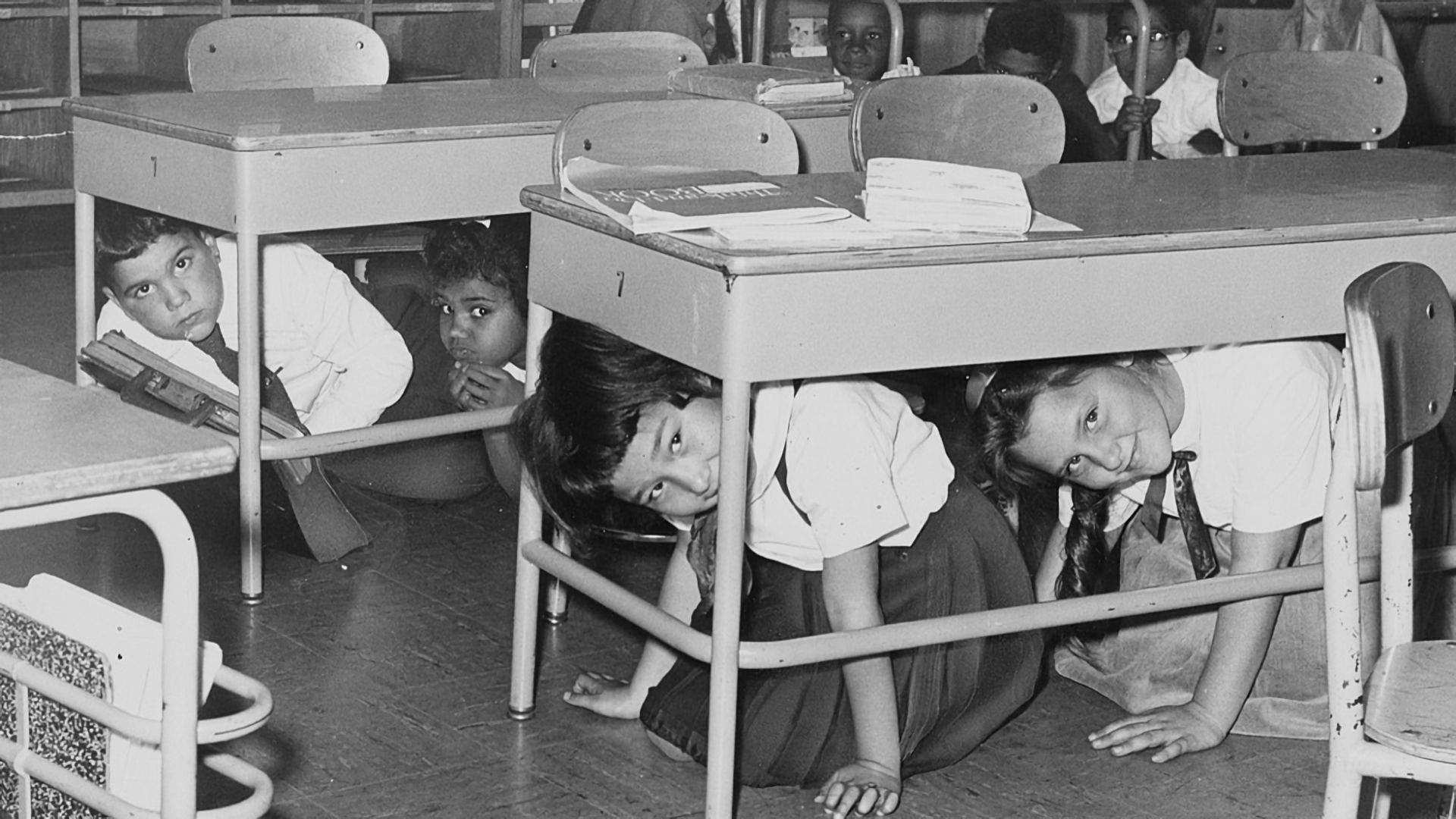

I was born in 1947, so my first real memories of the nuclear age were duck-and-cover drills in grade school, and the chill of the Cuban missile crisis, when I was fifteen, remains vivid.

By Gregory F. Treverton

Note: The views expressed here are those of the author and do not necessarily represent or reflect the views of SMA, Inc.

When I grew up, though, I had the chance both to work on and to reflect on nuclear weapons, and the good fortune to encounter some of the men—alas, there were too few women then—who had built the weapons and, more important for me, laid the basis for thinking about the nuclear revolution in world affairs. After the first atomic test, Robert Oppenheimer captured that revolution by quoting the Bhagavad Gita: “Now I am become Death, the destroyer of worlds.”

Enemies and Armageddon

In retrospect, probably the most dangerous years of the nuclear era were those when I was still a toddler. The Soviet Union built its first A-bomb sooner than the United States expected, but the US military services were still inclined to view atomic, then nuclear, weapons as a cheap equalizer: witness the “New Look” of 1953, the “New New Look” of 1956, and the accompanying adoption by the Army of pentomic divisions. All of these innovations sought to use tactical nuclear weapons to offset the presumed Soviet advantage in conventional military power on the central front in Europe.

Rivalry between the services was intense; the pentomic divisions, for example, were the Army’s effort to stay in the game as nuclear funding flowed to the Air Force and Navy. One of my mentors and, later, colleagues, Ernest May, recalled doing a history of the US–Soviet nuclear competition. Time and again, his interlocutors would, in a Freudian slip, substitute “Air Force” or “Navy” for “Soviet Union” in describing the competition. A few years ago, I had the chance, in a bit of classified tourism, to visit the Defense Threat Reduction Agency’s museum of US nuclear weapons at the Sandia National Laboratories in New Mexico. The range of zany ideas, and the competition between the services, are hard to imagine, extending almost literally to a nuclear bomb in a knapsack (what a serene hike that would have been!). No doubt the Soviets had just as many crackpot ideas. I came away thinking: “Boy, were we lucky we didn’t blow ourselves up.”

That we didn’t blow up the planet owes in great measure, I have come to realize, to those early thinkers about “the destroyer of worlds.” In his 1945 memorandum, “The Atomic Bomb and American Security,” published by the Yale Institute of International Studies, Bernard Brodie prefigured in a few pages most of what we later understood about nuclear weapons.[1] Once nuclear fission was discovered, in 1939, nuclear weapons were inevitable. And they were not just the latest in the list of innovations in warfare; rather, they “threaten[ed] to make the rest of the list relatively unimportant.” Moreover, they gave a permanent advantage to the offense for one simple reason: rockets might move, but cities don’t; they are forever vulnerable. That vulnerability set off a quest to fashion nuclear strategies—and later arms control arrangements—to deter a first strike, a quest that culminated in “mutual assured destruction” (MAD). It was summarized in the hoary maxim of Cold War nuclear deterrence: “defense is offense, killing people is good, killing weapons is bad.” The logic was clear if ugly: if neither side could strike first without still suffering a devastating second strike from the enemy, neither would have any incentive to strike first.

All these nuclear tergiversations, as William Buckley might have put it, stem from the central conundrum: if ordering a nuclear first strike to kill, perhaps, millions of people was all but insane, so was retaliating against that strike with one equally destructive. On the possibility of a Soviet first strike, we used to joke about the Soviet general whose toaster burnt his breakfast toast and who then arrived at headquarters to find the elevator out of order. He proceeded to walk up seven flights of stairs to order a nuclear first strike on the United States!

Given the madness of MAD, the architects of nuclear strategy performed all manner of intellectual gymnastics. Herman Kahn built ladders of escalation to try to ensure that even if nuclear weapons were used, the conflict might stop short of Armageddon. To sustain credibility, others imagined second-strike threats that, if not automatic, at least left something to chance: much as New York drivers give cabbies a wide berth, knowing they will drive aggressively, so, too, a little madness might make MAD second-strike threats more credible.

I never knew Brodie or Kahn, but I was lucky enough to cross paths with other nuclear pioneers—Albert Wohlstetter, Harry Rowen, Henry Kissinger, and, especially, Thomas Schelling, whose The Strategy of Conflict ought to be required reading for anyone tempted to talk about strategy, and not just nuclear strategy.[2] Tom taught me graduate economics and was later my faculty colleague and fellow gym rat; at more than one gym I’ve quoted Tom’s line that the best thing about working out was that you were never more than a minute from being finished—with that exercise, at least. I’ve known people whose minds brought clarity to lateral thinking, but in my experience Tom was in a class by himself at thinking deeply. Just when you thought there could be no further insight from digging another level down, he would articulate one.

Allies and Reassurance

Most of my own nuclear work dealt with allies, with what came to be called “extended deterrence.” There, the conundrum deepened: if nuclear threats to protect the homeland from nuclear attack were barely credible, how could those threats possibly be made credible to protect other nations, including from conventional attack—that threat of the Red Army pouring into western Europe? How could the United States and NATO credibly threaten to use nuclear weapons first? In trying to answer that question, we performed intellectual gymnastics of our own.

Perhaps using weapons with less collateral damage would be more credible: hence the idea of a “neutron bomb,” one with less blast and more radiation. In the late 1970s, we prepared a line for our boss, National Security Advisor Zbigniew Brzezinski, to use at a Gridiron dinner in Washington, a line that, fortunately, he had the good sense not to use: “Harold Brown [the secretary of defense] has a new bomb, and it’s a real bomb. It kills the opposing team and the spectators but leaves the stadium intact.” At the same time, we were peddling “depleted uranium” (DU) rounds to our allies; these were better tank penetrators than conventional rounds. As good National Security Council staffers, we sometimes carried a bullet to show—but only in our back pockets!

Most of the time, though, our task was to keep the NATO alliance cohesive by tying Europe’s security to America’s, what was often called “coupling.” Good ideas were bad if they risked decoupling Europe from the United States. Mr. Reagan’s vaunted “Star Wars” was a case in point, for it promised to produce the equivalent of a dome protecting the United States—but not its allies—from nuclear attack.

To be sure, there was always considerable theology to these discussions. A sharp-eyed reader might notice, for instance, that if the US homeland, rather than sharing Europe’s nuclear vulnerability, were less vulnerable to nuclear attack, it could be argued that this should have made it more, not less, credible that Washington would carry out NATO strategy by threatening a nuclear riposte to a conventional Red Army attack on western Europe.

The big alliance issue of the 1970s was intermediate-range nuclear forces, or INF. The logic again grew out of ladders of escalation and decoupling: the Soviets were deploying INF, the SS‑20s, while the US and NATO had nothing of comparable range. If the Soviets threatened to escalate a war in Europe from battlefield nuclear weapons to SS‑20 strikes on western Europe, and the only US counter was an escalation to strategic nuclear weapons launched from the United States, would that be credible? Wasn’t it necessary to have nuclear weapons based in Europe with enough range to threaten the Soviet Union?

By the late 1970s I was out of government but had become convinced that the answer was “yes.” I had trouble, though, persuading the august journal Foreign Affairs that the issue was even worth an article.[3] In the end, NATO also decided the answer was yes and, over considerable street protest in Europe, began deploying INF missiles there in 1983. It was spared keeping them by the unlikely agreement of Ronald Reagan and Mikhail Gorbachev. Reagan came up with the “zero option” for INF more or less on his own, so far as I can tell. Surely it was not something we experts would have thought respectable: after all, the Soviets had INF missiles while we only had INF plans. It took a politician to say that zero is zero, and thus fair to both sides. The result was the INF treaty of 1987, which banned such missiles and spared NATO the political misery of keeping them.

In the early 1980s, living in London, I twice found myself at meetings of eminent nuclear physicists, organized by Italy’s then pre-eminent physicist, Antonino Zichichi, at a delightful crusader village, Erice, in the Sicilian hills. The group was a who’s who of nuclear physics, including Eugene Wigner, Edward Teller, Paul Dirac, Pyotr Kapitsa, Richard Garwin, and others. If the group was eminent, the conversations were often appalling. The physicists would opine about nuclear weapons in ways that would have earned their graduate students failing grades if said about nuclear physics. And some in the group were related by marriage, so often when I talked to one, another’s wife would come over and whisper: “What did he say?” It was like being in a sandbox with very smart people.

In retrospect, it strikes me that the first years of the nuclear era were ones of strategic ambiguity, as the world struggled to discern what these destroyers of worlds meant for the nature of warfare, especially on the battlefield. By the middle of the 1970s or so, there was more strategic clarity: the nuclear world was bipolar, and the goal was to render any strategic first strike incredible. That was the era of the arms control agreements—from SALT I and SALT II to the INF treaty, and importantly including the Anti-Ballistic Missile (ABM) treaty, which limited missile defenses on the logic of the MAD maxim that “defense was offense” if those defenses undermined the other side’s second-strike capability.

Now, I fear, we are entering another period of strategic ambiguity. China’s nuclear forces are too capable to give that country a bye in any nuclear negotiations. Russia (and Pakistan) have adopted a “some first use” nuclear doctrine not unlike NATO’s during the Cold War. North Korea is now a nuclear power, and Iran is perhaps not far behind. Nuclear proliferation is changing in character in a world where Saudi Arabia might buy a bomb, or even seek prestige by pretending it has one when it doesn’t, while Japan and South Korea might simply remind the world that they could build nuclear weapons in short order if they so chose. In those circumstances, we may be tempted to feel that MAD was not so bad.

[1] Bernard Brodie, “The Atomic Bomb and American Security,” in Philip Bobbitt, Lawrence Freedman, and Gregory F. Treverton (eds.), US Nuclear Strategy (London: Palgrave Macmillan, 1989), doi.org/10.1007/978-1-349-19791-0_7. This volume includes the major ideas and documents of Cold War nuclear history.

[2] Thomas Schelling, The Strategy of Conflict (Harvard University Press, 1981). The quote is from p. 65.

[3] In the end, the editor, William Bundy, decided it was: “Nuclear Weapons and the ‘Gray Area,’” Foreign Affairs, vol. 57, no. 5 (Summer 1979).